I’m Lucky

2020 has forced me, like many others, to reflect on my own privilege and why I’ve been able to accomplish what I have.

I want to show gratitude towards the folks who helped propel my career to where it is today. I had/have so many things going for me, and these people played a bigger part than most. If you find yourself stuck in your career, maybe you can find some inspiration here from a lesson that someone taught me. Who in your life can play a part like these people did in mine?

Coach Condon – Making things happen for so many of us.

John, Tristan, Wade, Tom, Emma – Thanks for taking a chance on me!

Konrad, Vicky, Lev, Muness – You have taught me so much.

Richard – Starting our first business together.

Alyson and Sam – Everyone needs family friends like you in their life.

Emilie, Ally, Stephanie, Simon – Thanks for the continuing amazing peer-learning.

Lindsey – Thanks for the love, support, and health!

If you narrow in on the people who took a chance and influenced a step-change in my career arc, it’s sobering how often personal relationships played a part in addition to my own hustle. While I created some of this momentum on my own, I had the confidence to do so because of all of the other times when people like these helped make sure that things worked out for me.

I’m offering free coaching for underrepresented folks in tech

Even if I take for granted that I’m good at what I do, without these people (and so many more) willing to believe in me at various points in my career, I wouldn’t be where I am. I’m certain that there are great people out there who haven’t been given those chances, and that’s why I want to try to pay it forward by making some of my coaching free.

Who helped you get where you are today? Who’s the best person you know who hasn’t been given that chance yet? Should you recommend them to work with me for free?

Appendix – More detailed gratitude – Who does these things for you?

- Jim (Coach) Condon – There’s likely no businessperson with a bigger impact on my career arc than Coach Condon. I still remember him giving a career day presentation about CyberCash (think some pre-alpha version of PayPal crossed with Bitcoin) and the general knowledge of business that I picked up spending time at their house. Even as a kid, I had a sense that I might want a career like his one day: rarely a founder, but a serial executive. It gave me an interesting target for thinking about what I could accomplish and drove me to consider what a path like that might look like. Obviously someone doesn’t just “become an executive,” so I had to learn to think backwards from there. Plus, he personally got me my first two amazing jobs in high school, and directly influenced a job or two after that. I’m confident in saying that without Coach Condon, my career would look completely different.

- John Bracken – When I decided that I wanted to get back into tech/startups, I knew that I had the skills and passion for it, but getting that first transition job is always a challenge. By encouraging me to start as a side project (he didn’t even know that I was going to suggest that to him!) he gave me the chance to prove it.

- Konrad Waliszewski – While John gave me my shot at Speek, Konrad showed me the type of leader that I want to be. To put it simply, I know that I’m not alone as a former coworker who hopes to get a chance to work with Konrad again.

- Vicky Volvovski – I’ve never seen anything like Vicky’s career arc at Zapier. While we bonded over both having to apply 3 times before getting hired at Zapier, it is our working relationship that has taught me so much. Knowing that someone is good enough at “getting stuff done” to go from blog freelancer to the Zapier exec team is an inspiration. Her approach to solving problems and thinking about the business is something that I’ll continue to apply regularly. And if we could all just get stuff done like she can, there’s no limit to what a team can accomplish.

- Lev Volftsun – I knew from high school how successful a businessperson Lev was, so I wanted to work for him just to learn how he thought about running a company. Working for him taught me the power of expectations in business: both for yourself and for others.

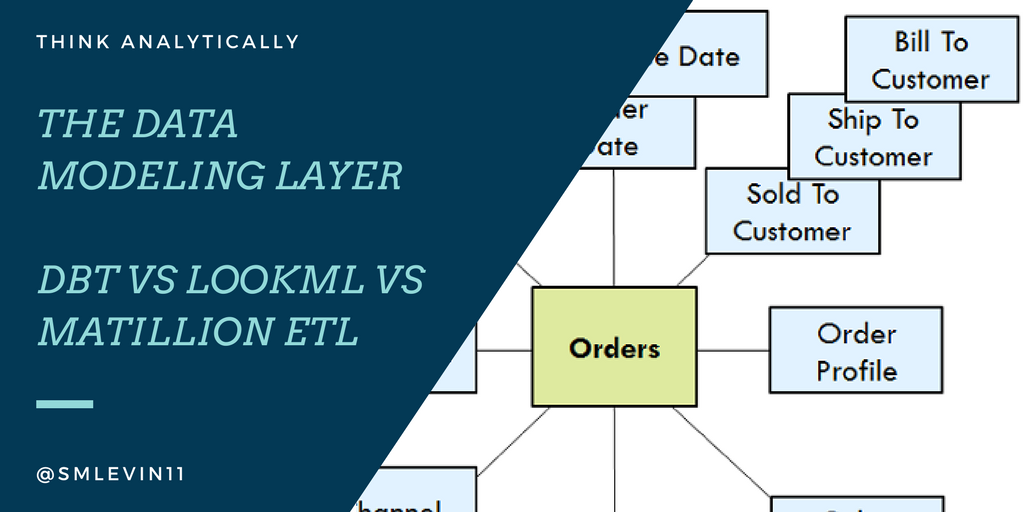

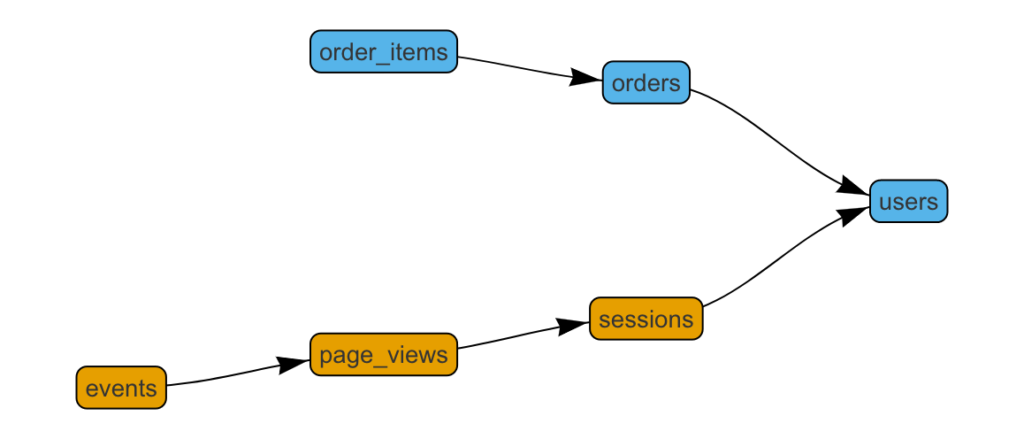

- Tristan Handy – In Fishtown’s very early days, Tristan was turning down work because his folks were too busy. dbt was brand new, and in one of the only cases I can remember, I was completely uncertain whether I could pick up some of that work. He convinced me to take on a short contract stint with them anyway, and said that I’d be fine. I know that the data modeling skills I worked on during my time there influenced my ability to get later jobs.

- Wade Foster – While I work for Wade now, that wasn’t how my time at Zapier started. Wade took a phone call off of a cold email and stayed in communication over a number of months before we found the right fit for me at Zapier on the data team. It only took 3 deep interview processes! 😂 Even after I was working on the data team, creating the role I now have working for him was not necessarily an obvious move, and I still remember his quote the day we decided: “I’m still not sure this is going to work, but I’d like to give it a shot.” I hope that choice continues to pay dividends for both of us!

- Muness Castle – My first Zapier manager, who helped ensure that I was able to work on projects that helped me grow and show off the skills I’d need later. Without some of those assignments and opportunities, it’s not certain that I’d have gotten the later roles that I did.

- Emma Candelier – I wasn’t completely sure how I was going to wrap up my last year at UVA; I was considering a 5 year MA in Math, but I knew I liked business, too. Talking through my options for MS Commerce with Emma set me up exactly where I wanted to be coming out of school.

- Richard Kim (and his parents) – The best man at my wedding and also my first business partner. Without our tiptoes into tutoring businesses before and after college, I’m not sure when I would have incorporated my first company. His parents’ company was also a success story that guided both of us towards business, but also served as general inspiration for what success can look like.

- Sam and Alyson Rod – In addition to being our closest family friends and a core part of my personal support system growing up, knowing that they “ran their business from the basement” made owner-entrepreneurship a completely normal part of my childhood. Plus, Alyson embodied the type of adult and parent that I still aspire to be. I wish everyone had someone like her in their life, and I’m so grateful that I do.

- Emilie Schario, Ally Hendrickson, Stephanie Couzin, Simon Ouderkirk – I haven’t had a chance to work with you all in the same depth that I have with most of the folks on this list, but after all of our conversations, I come away motivated to do great work. We all have high expectations for ourselves and others, and I appreciate being able to bounce ideas off of and learn from all of you!

- Tom Becher – Data Analytics was still not well defined when I first reached out about a job. Thanks for taking a chance on a first-year college student. My time at MITRE helped me build the core of my technical data analytics skills that continue to serve me well today.

- Lindsey and Jean – It might be cliche to include my wife and daughter here, but it’s my post so I’m doing it anyway! 😆 Without Lindsey I wouldn’t have learned to reflect to the degree that enables a post like this to happen. If I want to tie it more directly to career, she’s a big part of why I eat healthy and develop healthy habits that continue to allow me to perform at a high level.
